Companies throughout each trade are rolling out AI companies this yr. For Microsoft, Oracle, Perplexity, Snap and lots of of different main firms, utilizing the NVIDIA AI inference platform — a full stack comprising world-class silicon, methods and software program — is the important thing to delivering high-throughput and low-latency inference and enabling nice person experiences whereas decreasing value.

NVIDIA’s developments in inference software program optimization and the NVIDIA Hopper platform are serving to industries serve the most recent generative AI fashions, delivering glorious person experiences whereas optimizing whole value of possession. The Hopper platform additionally helps ship as much as 15x extra vitality effectivity for inference workloads in comparison with earlier generations.

AI inference is notoriously troublesome, because it requires many steps to strike the appropriate steadiness between throughput and person expertise.

However the underlying aim is easy: generate extra tokens at a decrease value. Tokens signify phrases in a big language mannequin (LLM) system — and with AI inference companies usually charging for each million tokens generated, this aim affords probably the most seen return on AI investments and vitality used per job.

Full-stack software program optimization affords the important thing to bettering AI inference efficiency and attaining this aim.

Price-Efficient Person Throughput

Companies are sometimes challenged with balancing the efficiency and prices of inference workloads. Whereas some prospects or use circumstances may fit with an out-of-the-box or hosted mannequin, others might require customization. NVIDIA applied sciences simplify mannequin deployment whereas optimizing value and efficiency for AI inference workloads. As well as, prospects can expertise flexibility and customizability with the fashions they select to deploy.

NVIDIA NIM microservices, NVIDIA Triton Inference Server and the NVIDIA TensorRT library are among the many inference options NVIDIA affords to go well with customers’ wants:

- NVIDIA NIM inference microservices are prepackaged and performance-optimized for quickly deploying AI basis fashions on any infrastructure — cloud, information facilities, edge or workstations.

- NVIDIA Triton Inference Server, one of many firm’s hottest open-source tasks, permits customers to bundle and serve any mannequin whatever the AI framework it was educated on.

- NVIDIA TensorRT is a high-performance deep studying inference library that features runtime and mannequin optimizations to ship low-latency and high-throughput inference for manufacturing purposes.

Accessible in all main cloud marketplaces, the NVIDIA AI Enterprise software program platform consists of all these options and gives enterprise-grade help, stability, manageability and safety.

With the framework-agnostic NVIDIA AI inference platform, firms save on productiveness, improvement, and infrastructure and setup prices. Utilizing NVIDIA applied sciences also can enhance enterprise income by serving to firms keep away from downtime and fraudulent transactions, improve e-commerce buying conversion charges and generate new, AI-powered income streams.

Cloud-Primarily based LLM Inference

To ease LLM deployment, NVIDIA has collaborated carefully with each main cloud service supplier to make sure that the NVIDIA inference platform may be seamlessly deployed within the cloud with minimal or no code required. NVIDIA NIM is built-in with cloud-native companies akin to:

- Amazon SageMaker AI, Amazon Bedrock Market, Amazon Elastic Kubernetes Service

- Google Cloud’s Vertex AI, Google Kubernetes Engine

- Microsoft Azure AI Foundry coming quickly, Azure Kubernetes Service

- Oracle Cloud Infrastructure’s information science instruments, Oracle Cloud Infrastructure Kubernetes Engine

Plus, for custom-made inference deployments, NVIDIA Triton Inference Server is deeply built-in into all main cloud service suppliers.

For instance, utilizing the OCI Knowledge Science platform, deploying NVIDIA Triton is so simple as turning on a swap within the command line arguments throughout mannequin deployment, which immediately launches an NVIDIA Triton inference endpoint.

Equally, with Azure Machine Studying, customers can deploy NVIDIA Triton both with no-code deployment by the Azure Machine Studying Studio or full-code deployment with Azure Machine Studying CLI. AWS gives one-click deployment for NVIDIA NIM from SageMaker Market and Google Cloud gives a one-click deployment possibility on Google Kubernetes Engine (GKE). Google Cloud gives a one-click deployment possibility on Google Kubernetes Engine, whereas AWS affords NVIDIA Triton on its AWS Deep Studying containers.

The NVIDIA AI inference platform additionally makes use of standard communication strategies for delivering AI predictions, mechanically adjusting to accommodate the rising and altering wants of customers inside a cloud-based infrastructure.

From accelerating LLMs to enhancing artistic workflows and reworking settlement administration, NVIDIA’s AI inference platform is driving real-world influence throughout industries. Learn the way collaboration and innovation are enabling the organizations beneath to attain new ranges of effectivity and scalability.

Serving 400 Million Search Queries Month-to-month With Perplexity AI

Perplexity AI, an AI-powered search engine, handles over 435 million month-to-month queries. Every question represents a number of AI inference requests. To fulfill this demand, the Perplexity AI workforce turned to NVIDIA H100 GPUs, Triton Inference Server and TensorRT-LLM.

Supporting over 20 AI fashions, together with Llama 3 variations like 8B and 70B, Perplexity processes numerous duties akin to search, summarization and question-answering. By utilizing smaller classifier fashions to route duties to GPU pods, managed by NVIDIA Triton, the corporate delivers cost-efficient, responsive service beneath strict service stage agreements.

By way of mannequin parallelism, which splits LLMs throughout GPUs, Perplexity achieved a threefold value discount whereas sustaining low latency and excessive accuracy. This best-practice framework demonstrates how IT groups can meet rising AI calls for, optimize whole value of possession and scale seamlessly with NVIDIA accelerated computing.

Lowering Response Instances With Recurrent Drafter (ReDrafter)

Open-source analysis developments are serving to to democratize AI inference. Just lately, NVIDIA integrated Redrafter, an open-source strategy to speculative decoding printed by Apple, into NVIDIA TensorRT-LLM.

ReDrafter makes use of smaller “draft” modules to foretell tokens in parallel, that are then validated by the principle mannequin. This method considerably reduces response instances for LLMs, significantly during times of low visitors.

Remodeling Settlement Administration With Docusign

Docusign, a pacesetter in digital settlement administration, turned to NVIDIA to supercharge its Clever Settlement Administration platform. With over 1.5 million prospects globally, Docusign wanted to optimize throughput and handle infrastructure bills whereas delivering AI-driven insights.

NVIDIA Triton offered a unified inference platform for all frameworks, accelerating time to market and boosting productiveness by remodeling settlement information into actionable insights. Docusign’s adoption of the NVIDIA inference platform underscores the constructive influence of scalable AI infrastructure on buyer experiences and operational effectivity.

“NVIDIA Triton makes our lives simpler,” mentioned Alex Zakhvatov, senior product supervisor at Docusign. “We not have to deploy bespoke, framework-specific inference servers for our AI fashions. We leverage Triton as a unified inference server for all AI frameworks and in addition use it to establish the appropriate manufacturing situation to optimize cost- and performance-saving engineering efforts.”

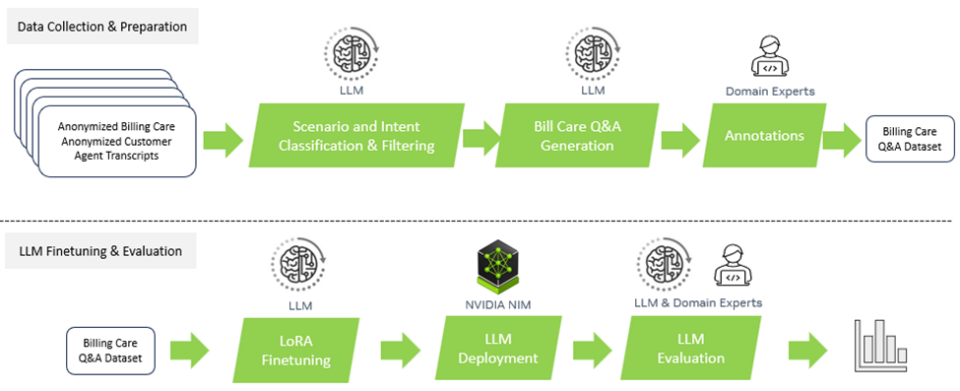

Enhancing Buyer Care in Telco With Amdocs

Amdocs, a number one supplier of software program and companies for communications and media suppliers, constructed amAIz, a domain-specific generative AI platform for telcos as an open, safe, cost-effective and LLM-agnostic framework. Amdocs is utilizing NVIDIA DGX Cloud and NVIDIA AI Enterprise software program to supply options primarily based on commercially obtainable LLMs in addition to domain-adapted fashions, enabling service suppliers to construct and deploy enterprise-grade generative AI purposes.

Utilizing NVIDIA NIM, Amdocs lowered the variety of tokens consumed for deployed use circumstances by as much as 60% in information preprocessing and 40% in inferencing, providing the identical stage of accuracy with a considerably decrease value per token, relying on varied elements and volumes used. The collaboration additionally lowered question latency by roughly 80%, guaranteeing that finish customers expertise close to real-time responses. This acceleration enhances person experiences throughout commerce, customer support, operations and past.

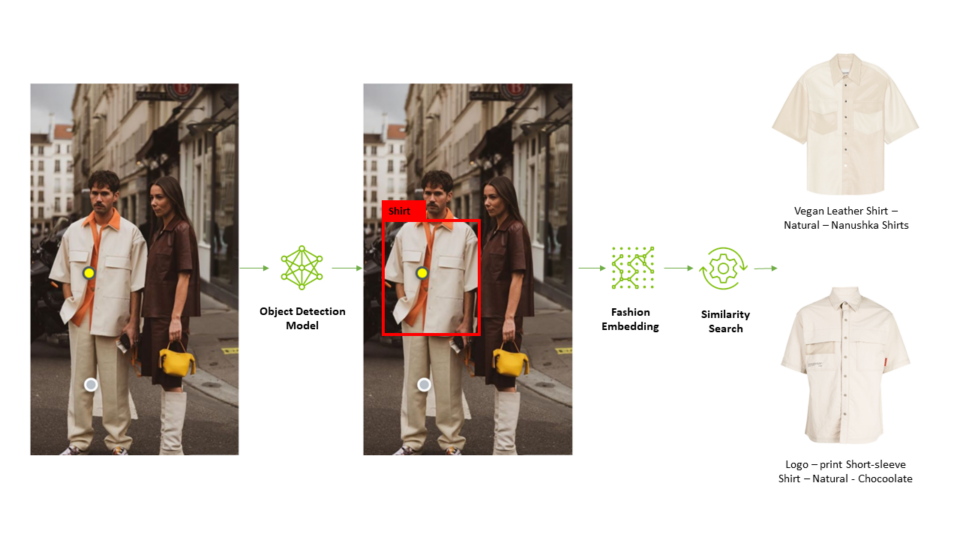

Revolutionizing Retail With AI on Snap

Looking for the right outfit has by no means been simpler, due to Snap’s Screenshop characteristic. Built-in into Snapchat, this AI-powered software helps customers discover trend objects seen in pictures. NVIDIA Triton performed a pivotal function in enabling Screenshop’s pipeline, which processes photos utilizing a number of frameworks, together with TensorFlow and PyTorch.

By consolidating its pipeline onto a single inference serving platform, Snap considerably lowered improvement time and prices whereas guaranteeing seamless deployment of up to date fashions. The result’s a frictionless person expertise powered by AI.

“We didn’t need to deploy bespoke inference serving platforms for our Screenshop pipeline, a TF-serving platform for TensorFlow and a TorchServe platform for PyTorch,” defined Ke Ma, a machine studying engineer at Snap. “Triton’s framework-agnostic design and help for a number of backends like TensorFlow, PyTorch and ONNX was very compelling. It allowed us to serve our end-to-end pipeline utilizing a single inference serving platform, which reduces our inference serving prices and the variety of developer days wanted to replace our fashions in manufacturing.”

Following the profitable launch of the Screenshop service on NVIDIA Triton, Ma and his workforce turned to NVIDIA TensorRT to additional improve their system’s efficiency. By making use of the default NVIDIA TensorRT settings in the course of the compilation course of, the Screenshop workforce instantly noticed a 3x surge in throughput, estimated to ship a staggering 66% value discount.

Monetary Freedom Powered by AI With Wealthsimple

Wealthsimple, a Canadian funding platform managing over C$30 billion in belongings, redefined its strategy to machine studying with NVIDIA’s AI inference platform. By standardizing its infrastructure, Wealthsimple slashed mannequin supply time from months to beneath quarter-hour, eliminating downtime and empowering groups to ship machine studying as a service.

By adopting NVIDIA Triton and working its fashions by AWS, Wealthsimple achieved 99.999% uptime, guaranteeing seamless predictions for over 145 million transactions yearly. This transformation highlights how strong AI infrastructure can revolutionize monetary companies.

“NVIDIA’s AI inference platform has been the linchpin in our group’s ML success story, revolutionizing our mannequin deployment, lowering downtime and enabling us to ship unparalleled service to our purchasers,” mentioned Mandy Gu, senior software program improvement supervisor at Wealthsimple.

Elevating Inventive Workflows With Let’s Improve

AI-powered picture technology has reworked artistic workflows and may be utilized to enterprise use circumstances akin to creating personalised content material and imaginative backgrounds for advertising visuals. Whereas diffusion fashions are highly effective instruments for enhancing artistic workflows, the fashions may be computationally costly.

To optimize its workflows utilizing the Steady Diffusion XL mannequin in manufacturing, Let’s Improve, a pioneering AI startup, selected the NVIDIA AI inference platform.

Let’s Improve’s newest product, AI Photoshoot, makes use of the SDXL mannequin to remodel plain product pictures into stunning visible belongings for e-commerce web sites and advertising campaigns.

With NVIDIA Triton’s strong help for varied frameworks and backends, coupled with its dynamic batching characteristic set, Let’s Improve was in a position to seamlessly combine the SDXL mannequin into current AI pipelines with minimal involvement from engineering groups, releasing up their time for analysis and improvement efforts.

Accelerating Cloud-Primarily based Imaginative and prescient AI With OCI

Oracle Cloud Infrastructure (OCI) built-in NVIDIA Triton to energy its Imaginative and prescient AI service, enhancing prediction throughput by as much as 76% and lowering latency by 51%. These optimizations improved buyer experiences with purposes together with automating toll billing for transit companies and streamlining bill recognition for international companies.

With Triton’s hardware-agnostic capabilities, OCI has expanded its AI companies portfolio, providing strong and environment friendly options throughout its international information facilities.

“Our AI platform is Triton-aware for the advantage of our prospects,” mentioned Tzvi Keisar, a director of product administration for OCI’s information science service, which handles machine studying for Oracle’s inside and exterior customers.

Actual-Time Contextualized Intelligence and Search Effectivity With Microsoft

Azure affords one of many widest and broadest picks of digital machines powered and optimized by NVIDIA AI. These digital machines embody a number of generations of NVIDIA GPUs, together with NVIDIA Blackwell and NVIDIA Hopper methods.

Constructing on this wealthy historical past of engineering collaboration, NVIDIA GPUs and NVIDIA Triton now assist speed up AI inference in Copilot for Microsoft 365. Accessible as a devoted bodily keyboard key on Home windows PCs, Microsoft 365 Copilot combines the facility of LLMs with proprietary enterprise information to ship real-time contextualized intelligence, enabling customers to reinforce their creativity, productiveness and abilities.

Microsoft Bing additionally used NVIDIA inference options to handle challenges together with latency, value and velocity. By integrating NVIDIA TensorRT-LLM strategies, Microsoft considerably improved inference efficiency for its Deep Search characteristic, which powers optimized internet outcomes.

Deep search walkthrough courtesy of Microsoft

Microsoft Bing Visible Search permits folks around the globe to seek out content material utilizing pictures as queries. The guts of this functionality is Microsoft’s TuringMM visible embedding mannequin that maps photos and textual content right into a shared high-dimensional house. As a result of it operates on billions of photos throughout the online, efficiency is important.

Microsoft Bing optimized the TuringMM pipeline utilizing NVIDIA TensorRT and NVIDIA acceleration libraries together with CV-CUDA and nvImageCodec. These efforts resulted in a 5.13x speedup and vital TCO discount.

Unlocking the Full Potential of AI Inference With {Hardware} Innovation

Bettering the effectivity of AI inference workloads is a multifaceted problem that calls for progressive applied sciences throughout {hardware} and software program.

NVIDIA GPUs are on the forefront of AI enablement, providing excessive effectivity and efficiency for AI fashions. They’re additionally probably the most vitality environment friendly: NVIDIA accelerated computing on the NVIDIA Blackwell structure has lower the vitality used per token technology by 100,000x prior to now decade for inference of trillion-parameter AI fashions.

The NVIDIA Grace Hopper Superchip, which mixes NVIDIA Grace CPU and Hopper GPU architectures utilizing NVIDIA NVLink-C2C, delivers substantial inference efficiency enhancements throughout industries.

Unlocking Advertiser Worth With Meta Andromeda’s Business-Main ML

Meta Andromeda is utilizing the superchip for environment friendly and high-performing personalised adverts retrieval. By creating deep neural networks with elevated compute complexity and parallelism, on Fb and Instagram it has achieved an 8% advert high quality enchancment on choose segments and a 6% recall enchancment.

With optimized retrieval fashions and low-latency, high-throughput and memory-IO conscious GPU operators, Andromeda affords a 100x enchancment in characteristic extraction velocity in comparison with earlier CPU-based elements. This integration of AI on the retrieval stage has allowed Meta to steer the trade in adverts retrieval, addressing challenges like scalability and latency for a greater person expertise and better return on advert spend.

As cutting-edge AI fashions proceed to develop in measurement, the quantity of compute required to generate every token additionally grows. To run state-of-the-art LLMs in actual time, enterprises want a number of GPUs working in live performance. Instruments just like the NVIDIA Collective Communication Library, or NCCL, allow multi-GPU methods to rapidly trade massive quantities of information between GPUs with minimal communication time.

Future AI Inference Improvements

The way forward for AI inference guarantees vital advances in each efficiency and value.

The mixture of NVIDIA software program, novel strategies and superior {hardware} will allow information facilities to deal with more and more advanced and numerous workloads. AI inference will proceed to drive developments in industries akin to healthcare and finance by enabling extra correct predictions, sooner decision-making and higher person experiences.

As these traits proceed to evolve, it’s important that organizations keep updated and use the most recent inference optimizations to maximise their investments and stay aggressive within the period of AI.

Be taught extra about how NVIDIA is delivering breakthrough inference efficiency outcomes and keep updated with the most recent AI inference efficiency updates.